This post was originally published in Japanese.

Table of Contents

- 1. Introduction

- 2. What is API Gateway?

- 3. What is Kinesis Data Firehose?

- 4. Set up API Gateway

- 5. Connect with Kinesis Data Firehose

- 6. Conclusion

1. Introduction

On October 16th, 2019, AWS announced that Amazon API Gateway now supports access logging to Amazon Kinesis Data Firehose. In this post, we try out this feature to collect API access logs.

2. What is API Gateway?

Before we get started, let's look at what API Gateway is.

Here is the official information:

Amazon API Gateway

Amazon API Gateway Features

It lets you easily create, publish, maintain, monitor, and secure APIs, and it acts as the "front door" for your REST and WebSocket APIs. Because it can also be connected to services outside AWS, it truly serves its purpose as a gateway for APIs.

3. What is Kinesis Data Firehose?

WafCharm users may have already set up Kinesis Data Firehose, since WafCharm uses it for its Reporting & Notification feature.

Below is the official information:

Amazon Kinesis Data Firehose

Amazon Kinesis Data Firehose features

This service loads streaming data into data stores and analytics tools in near real time. You can load the data into Amazon S3, Amazon Redshift, Amazon Elasticsearch Service, and Splunk, and you can also use it with Kinesis Data Analytics.

4. Set up API Gateway

The API itself is not the main topic of this post, so we prepared a simple one that just connects to a Lambda function.

We followed the post below (written in Japanese) to set up API Gateway. If you need help, refer to any guide that walks you through setting up API Gateway.

https://qiita.com/tamura_CD/items/46ba8a2f3bfd5484843f

5. Connect with Kinesis Data Firehose

In this post, we will send access logs to S3 via Kinesis Data Firehose.

We referred to the official documentation below.

Logging API calls to Kinesis Data Firehose

First, we need to prepare Kinesis Data Firehose.

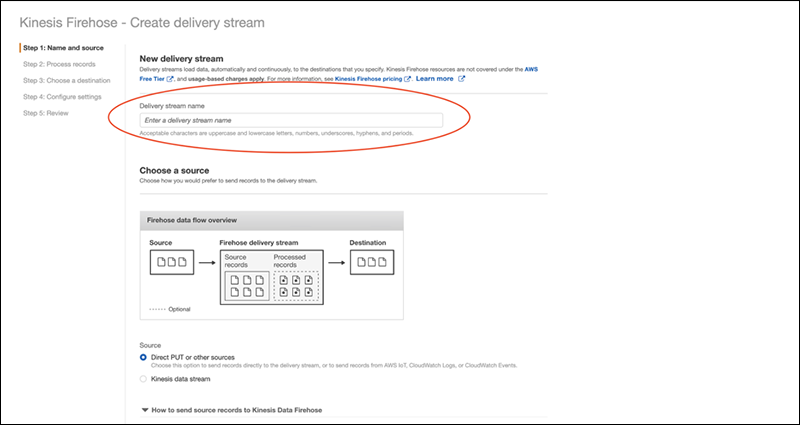

After logging in to the AWS Management Console, open the Kinesis Data Firehose page.

Then, click [Create delivery stream].

Next, enter the delivery stream name. When the stream is used with API Gateway, its name must follow a rule: it has to start with the prefix amazon-apigateway-.

Make sure to follow the rule and enter the name as:

amazon-apigateway-{your-delivery-stream-name}
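If you script your setup, the naming rule can be checked before the stream is created. Below is a minimal Python sketch; `REQUIRED_PREFIX`, `build_stream_name`, and `is_valid_for_api_gateway` are hypothetical names, and the check covers only the amazon-apigateway- prefix, not Firehose's other name constraints.

```python
# Prefix that API Gateway requires on the Firehose delivery stream name.
REQUIRED_PREFIX = "amazon-apigateway-"


def build_stream_name(suffix: str) -> str:
    """Hypothetical helper: prepend the required prefix to your own stream name."""
    if not suffix:
        raise ValueError("suffix must not be empty")
    return REQUIRED_PREFIX + suffix


def is_valid_for_api_gateway(name: str) -> bool:
    """Hypothetical helper: check only the prefix rule (plus a non-empty suffix)."""
    return name.startswith(REQUIRED_PREFIX) and len(name) > len(REQUIRED_PREFIX)


if __name__ == "__main__":
    print(build_stream_name("access-logs"))  # amazon-apigateway-access-logs
    print(is_valid_for_api_gateway("my-stream"))  # False
```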

Leave the other options at their defaults.

Keep the default settings on the next page as well.

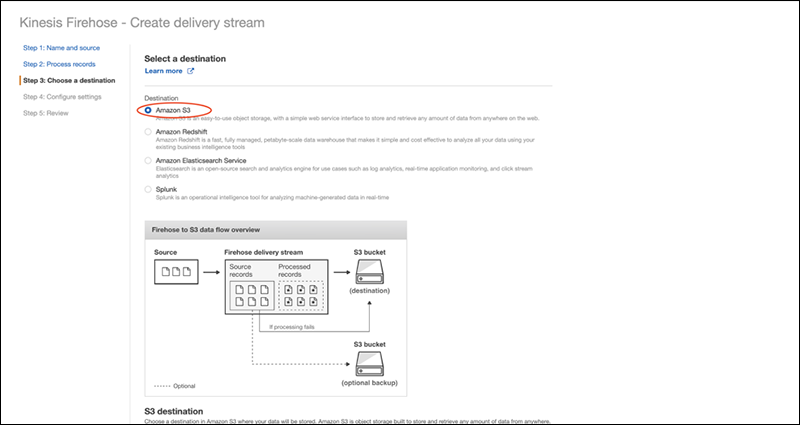

On the next page, you need to choose the destination for Kinesis Data Firehose.

Since we want to send the logs to an S3 bucket in this post, choose Amazon S3 as the destination.

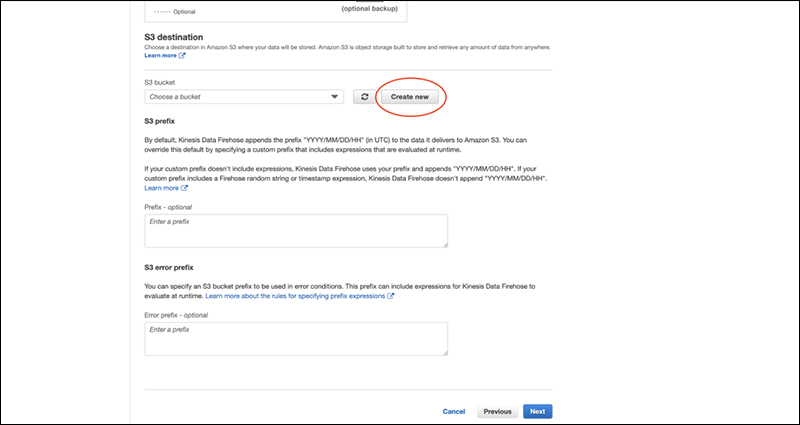

You will be asked to choose an S3 bucket; click [Create new] to create one.

You can leave the rest of the options empty.

*If you want to use an existing S3 bucket, select it from the drop-down menu.

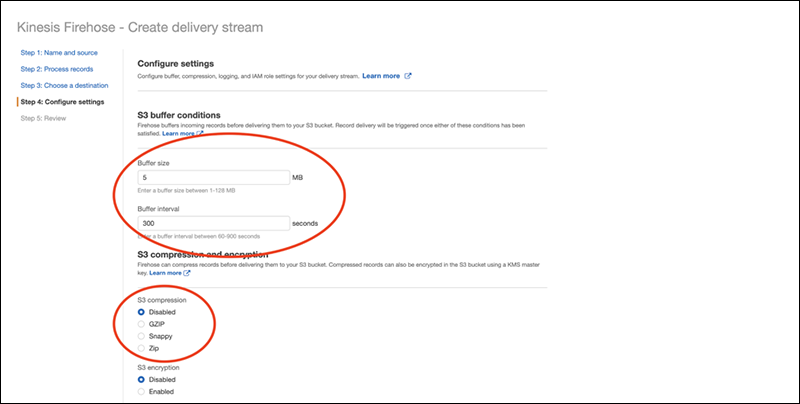

On the next page, you can set the Buffer size and Buffer interval.

Since this is only a test, we set both to their minimum values: 1 MB and 60 seconds.

Select GZIP for S3 compression to reduce S3 storage costs.
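For reference, the same buffer and compression choices can be expressed as parameters of the Firehose `CreateDeliveryStream` API. The sketch below only builds keyword arguments for boto3's `create_delivery_stream` and makes no AWS call; the helper name, role ARN, and bucket ARN are placeholders, and the `DirectPut` stream type is our assumption for a stream that API Gateway writes to directly.

```python
def firehose_s3_request(stream_name: str, role_arn: str, bucket_arn: str,
                        size_mb: int = 1, interval_s: int = 60) -> dict:
    """Hypothetical helper: kwargs for Firehose CreateDeliveryStream (S3 destination).

    Defaults mirror the test values in this post (1 MB / 60 seconds, GZIP).
    Nothing is sent to AWS here.
    """
    return {
        "DeliveryStreamName": stream_name,
        # Assumption: API Gateway puts records directly into the stream.
        "DeliveryStreamType": "DirectPut",
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,      # placeholder IAM role ARN
            "BucketARN": bucket_arn,  # placeholder S3 bucket ARN
            # Deliver when either the size or the interval is reached.
            "BufferingHints": {"SizeInMBs": size_mb, "IntervalInSeconds": interval_s},
            # Compress objects to lower S3 storage cost.
            "CompressionFormat": "GZIP",
        },
    }
```

To actually create the stream, you would pass the result to `boto3.client("firehose").create_delivery_stream(**kwargs)` with valid credentials; in this post we used the console instead.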

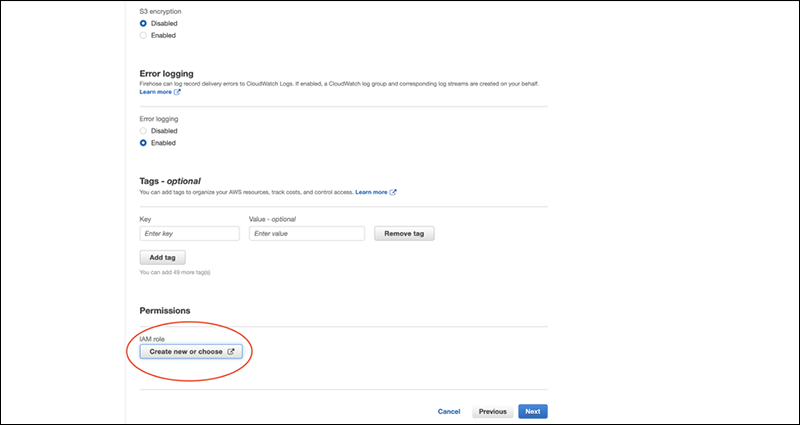

At the bottom of the page, create a new IAM role.

The role is granted permissions for the following services:

- CloudWatch Logs

- Glue

- Kinesis

- Lambda

- S3

Proceed through the remaining steps to finish creating the Kinesis Data Firehose delivery stream.

Once the delivery stream is created, let's associate it with API Gateway.

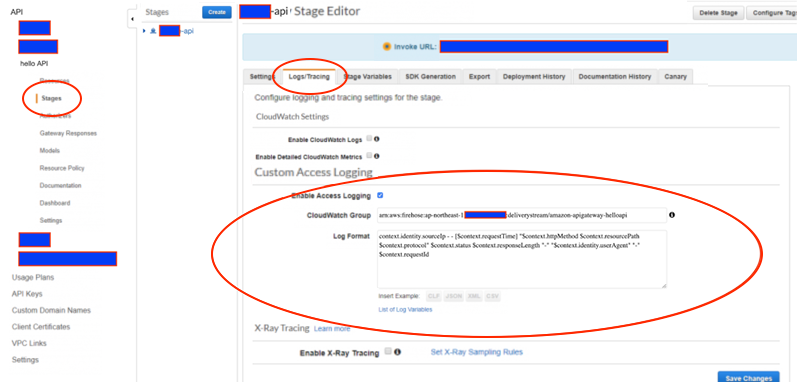

First, select the API you are using and click [Stages]. Then, check [Enable Access Logging] under the Custom Access Logging section of the Logs/Tracing tab.

Next, enter the Kinesis Data Firehose delivery stream ARN under [Access Log Destination ARN]. The ARN has the following format:

arn:aws:firehose:{region}:{account-id}:deliverystream/amazon-apigateway-{your-delivery-stream-name}

*This is the same value shown as the Delivery stream ARN in the Kinesis Data Firehose console.
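The ARN can also be assembled from its parts. Here is a minimal Python sketch with a hypothetical helper name and a placeholder region and account ID; note that the resource part of a Firehose delivery stream ARN is deliverystream/ followed by the stream name.

```python
def firehose_stream_arn(region: str, account_id: str, stream_name: str) -> str:
    """Hypothetical helper: build a Firehose delivery stream ARN."""
    # API Gateway only accepts delivery streams whose names have this prefix.
    if not stream_name.startswith("amazon-apigateway-"):
        raise ValueError("stream name must start with 'amazon-apigateway-'")
    return f"arn:aws:firehose:{region}:{account_id}:deliverystream/{stream_name}"


if __name__ == "__main__":
    # Placeholder region and account ID.
    print(firehose_stream_arn("ap-northeast-1", "123456789012", "amazon-apigateway-access-logs"))
    # arn:aws:firehose:ap-northeast-1:123456789012:deliverystream/amazon-apigateway-access-logs
```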

Lastly, enter the log format under [Log Format]. Presets are available for [CLF], [JSON], [XML], and [CSV].
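These console steps correspond to updating the stage's access log settings. In this post we used the console, but as a sketch, the following builds the `patchOperations` list for API Gateway's `UpdateStage` API on a REST API. The helper name is hypothetical, and no AWS call is made.

```python
def access_log_patch_ops(destination_arn: str, log_format: str) -> list:
    """Hypothetical helper: patch operations that enable access logging on a stage."""
    return [
        # Where access logs are sent: the Firehose delivery stream ARN.
        {"op": "replace", "path": "/accessLogSettings/destinationArn", "value": destination_arn},
        # The log format string, built from $context variables.
        {"op": "replace", "path": "/accessLogSettings/format", "value": log_format},
    ]
```

With boto3, the list would be passed as `boto3.client("apigateway").update_stage(restApiId=..., stageName=..., patchOperations=ops)`, using your own API ID and stage name.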

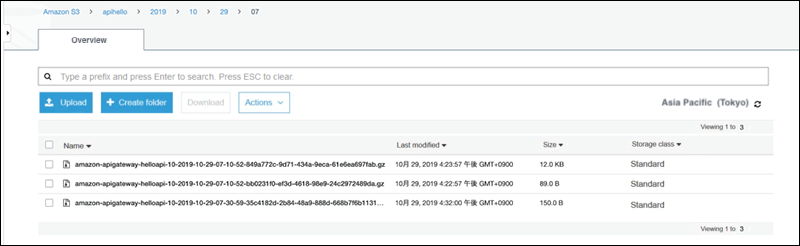

Once the configuration is complete, log files will appear in the S3 bucket.
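Because GZIP compression was selected, each object in S3 is gzip-compressed. Here is a minimal Python sketch that turns a downloaded object body into log lines. It assumes one access log entry per line, and the function name is hypothetical.

```python
import gzip


def decode_log_object(body: bytes) -> list:
    """Hypothetical helper: decompress a GZIP log object into a list of lines.

    Assumption: each access log entry is on its own line.
    """
    text = gzip.decompress(body).decode("utf-8")
    # Drop empty lines, such as the one left by a trailing newline.
    return [line for line in text.splitlines() if line.strip()]
```

The `body` could come from, for example, `boto3.client("s3").get_object(Bucket=..., Key=...)["Body"].read()`.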

How soon files are created depends on the Buffer size and Buffer interval you set for Kinesis Data Firehose.
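Firehose delivers a buffered batch when either the Buffer size or the Buffer interval is reached, whichever comes first. The sketch below is a simplified model of that rule, not Firehose's actual implementation. The function name is hypothetical, and treating 1 MB as 1,048,576 bytes is an assumption.

```python
def should_deliver(buffered_bytes: int, seconds_buffered: float,
                   size_mb: int = 1, interval_s: int = 60) -> bool:
    """Simplified model: deliver when the size OR the interval condition is met."""
    size_limit = size_mb * 1024 * 1024  # assumption: 1 MB treated as 1 MiB
    return buffered_bytes >= size_limit or seconds_buffered >= interval_s


if __name__ == "__main__":
    # With 1 MB / 60 seconds and little traffic, the interval triggers delivery.
    print(should_deliver(buffered_bytes=2_000, seconds_buffered=60))  # True
    print(should_deliver(buffered_bytes=2_000, seconds_buffered=30))  # False
```

In a small test like this one, the interval is the condition you are most likely to hit first.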

With the default CLF format, the logs looked like the following.

They are easy to read because they resemble familiar web server access logs.

Actual log

153.156.XXX.XXX - - [01/Nov/2019:02:XX:XX +0000] "GET /hello HTTP/1.1" 200 32 bb0afe31-edba-46a7-97c1-XXXXXXXXXXXX

Format

$context.identity.sourceIp $context.identity.caller $context.identity.user [$context.requestTime] "$context.httpMethod $context.resourcePath $context.protocol" $context.status $context.responseLength $context.requestId
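To process these logs programmatically, each line can be split back into the variables of the format above. Here is a minimal Python sketch using a regular expression. It assumes the default CLF format shown here, where only the bracketed time and the quoted request line contain spaces. The function and field names are our own.

```python
import re
from typing import Optional

# One group per $context variable in the default CLF format.
CLF_PATTERN = re.compile(
    r'(?P<source_ip>\S+) (?P<caller>\S+) (?P<user>\S+) '
    r'\[(?P<request_time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource_path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<response_length>\S+) (?P<request_id>\S+)$'
)


def parse_clf_line(line: str) -> Optional[dict]:
    """Hypothetical helper: parse one CLF access log line, or return None."""
    match = CLF_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    return record


if __name__ == "__main__":
    sample = ('153.156.XXX.XXX - - [01/Nov/2019:02:XX:XX +0000] '
              '"GET /hello HTTP/1.1" 200 32 bb0afe31-edba-46a7-97c1-XXXXXXXXXXXX')
    record = parse_clf_line(sample)
    print(record["resource_path"], record["status"])  # /hello 200
```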

*The format can be customized; see the list of $context variables in the official documentation. Note that $context.requestId is required, so it cannot be removed.
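When writing a custom format, it can help to check for the required variable before applying it. The sketch below builds a one-line JSON format from a mapping of field names to $context variables and rejects formats that omit $context.requestId. The helper and field names are hypothetical, and the variables are the ones from the CLF format above.

```python
import json


def build_json_log_format(fields: dict) -> str:
    """Hypothetical helper: build a single-line JSON access log format."""
    # $context.requestId is required, so refuse formats that omit it.
    if "$context.requestId" not in fields.values():
        raise ValueError("$context.requestId must be included")
    # Compact separators keep the format on a single line.
    return json.dumps(fields, separators=(",", ":"))


if __name__ == "__main__":
    print(build_json_log_format({
        "requestId": "$context.requestId",
        "ip": "$context.identity.sourceIp",
        "status": "$context.status",
    }))
    # {"requestId":"$context.requestId","ip":"$context.identity.sourceIp","status":"$context.status"}
```

Every value is quoted in this sketch, so `status` would be logged as a string.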

6. Conclusion

Kinesis Data Firehose makes collecting API Gateway access logs more convenient, and the logs can be used for many purposes, including API analysis. WafCharm also analyzes access logs, but it does not currently support API Gateway access logs, so we would like to consider supporting them in the future.